👋 Hello!

Oh man, it’s been so hot in London recently. Houses in the UK are built to live in the middle of the temperature distribution: not too hot, not too cold. Anything outside the realm of -5C to 25C doesn’t get handled well. Shout out to the people working all day in 35 degree heat without air conditioning.

If this is your first time here, hello! I’ve spent the past 7 years working for high growth companies. My path has followed a ‘do-what-needs-to-be-done’ path: software engineering, data science, machine learning, with a little product management thrown in for good measure. I’ve picked up some useful experience at the intersection of these disciplines, and I’d love to share it with you.

The goal of this newsletter is to discuss techniques for building better software and being more effective in high growth companies. I mix in longer form content — think a short blog post that you could read in 5 minutes — with some of my favourite links. Here’s some really popular posts from the past few months:

Stephen

Why is dbt so important?

I joined Monzo in March 2019 to run the Data Engineering team. Last summer, we migrated Monzo's entire analytics infrastructure to dbt from a homegrown solution. This post explains why we took a bet on dbt, and why I think it's one of the most important data tools around.

Where have we come from?

The 'looseness' of SQL

SQL is a very ‘loose’ language. It doesn’t naturally push you towards good software engineering practises around re-use of code, abstraction and layering, and separation of responsibility. There are often many ways to accomplish the same objective.

Users are left to choose their own adventure with respect to defining how different SQL files relate to each other, and how they run them in the correct order. Many organisations develop their own tools for running SQL, and managing relationships between various files. These tools incur high maintenance costs.

Additionally, these tools are a great example of ‘non-differentiated heavy lifting’: being great at running SQL generally doesn’t provide any competitive advantage in the market.

Testing is challenging

Testing is challenging in SQL. There's no tooling or primitives that make this particularly easy or encouraged. Users often have to build a custom way to test that their models are correct. This doesn’t create value.

Lack of machine readability

It's very hard to introspect SQL files for the actual schema they will generate. You can use a SQL parser to drive and derive structure, but this is complex. There are moves in this direction with projects like ZetaSQL, but it's still early days.

Comments are often kept in the code. They are useful, but duplication of what a particular field means is necessary for tools like Looker.

So, what is dbt?

dbt, as stated by the CEO himself, is ‘a tool to help you write and execute the data transformation jobs that run inside your warehouse’. It’s made out of two sections:

A compiler: you use a mixture of SQL, and Jinja to define your transformations in SQL. You can reference other models to build on top of them, instead of referring to tables. dbt then compiles everything down to the SQL that needs to run.

A runner: dbt understands the links between each model because of these references, creating a directed acyclic graph or DAG. It then runs your SQL against your data warehouse in the correct order.

Below shows the user experience on the command line. Write some slightly different looking SQL, and type dbt run. Simples! We’ll get into the many other features next.

How does it help?

SQL first

Data warehouses are a really effective way to efficiently transform data. I’d argue that you don’t really need to do much transformation before your data gets into a warehouse anymore. Load it in as raw a format as possible, and transform through SQL.

So, why not use Python, or other languages? SQL is arguably one of the most popular programming languages in the world. Many folks from across many disciplines know enough SQL to be productive. This isn’t the case with more traditional programming languages. SQL has a low learning curve to becoming productive in. Additionally, it's more focused to the job at hand, as opposed to being a general purpose language.

At Monzo, we encourage everyone to do data transformation in SQL, and take a good hard look when people are using other programming languages to do it in. We’d rather trade off absolute power and customisability in return making the 99% use case dramatically better.

SQL-centric

dbt code is all the SQL your data warehouse supports, plus some extra functionality. You don't feel like you're writing in a custom framework. This aids with onboarding and maintainability.

Structured relationships between models

A dbt model is a SQL statement. You can build a model from another model by referring to it, using the ref function.

This is simple, but extremely powerful. dbt now understands how your models relate to each other as a graph. Previously, any relationship between models was implicit, and ordering of execution would need to be provided by the programmer.

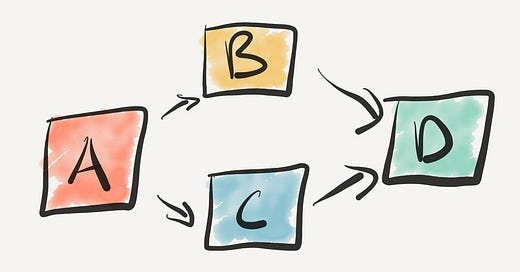

This means that dbt can run them in the correct order and run tests at the correct time. In the above example, dbt will run A. If that completes successfully, it will run B and C in parallel, running D after they have both completed.

If you want to get more advanced, you can parse this graph and perform structural analysis on it, and so on. Machine readability is powerful!

Modularity

A nice way to think about dbt models is to think of them as pure functions. They should accept some input data, and satisfy some clear objective without side effects, ideally in a simple manner. Structured relationships, in combination with other features, encourages modularity.

This modularity means that you can compose extremely complex models out of a set of individually simple models. This aids maintainability, and helps force clarity.

De-coupling data location from materialisation

Data from a staging environment is often not representative to test against, in the same way that it might be in software engineering. It’s very helpful to be able to use production data to develop.

dbt allows you to de-couple the place that you read data from (or the source) from the place that you write it to, or ‘materialise’ the output of the queries you are running. This means that everyone can have their own development dataset to use, that is completely decoupled from the production dataset!

Testing is a first-class citizen

Testing is a well-known technique in software engineering, but is still uncommon in data analysis and transformation. It’s a fundamental technique for ensuring correctness.

dbt allows two forms of testing: data tests, and schema tests. I find it useful to liberally scatter schema tests: it helps highlight when any invariants are broken.

Promoting testing to be a first class citizen helps up the quality bar for models written using dbt.

Easy continuous integration

To supercharge your tests, run them automatically on every change you submit to your source code repository of choice and don't allow the merging of code that fails tests.

De-coupling source data and materialisation means that running continuous integration tests becomes trivial. You can create a fresh environment per CI run, run all of your dbt models, run the tests, and delete the environment. To repeat: all of the changes that you made will be written to a completely fresh environment that is decoupled from production.

This allows you to ensure that your changes haven’t broken anything, which greatly increases reliability when you run it in production. This has historically been very challenging to do with SQL: you’d need to parameterise all of the tables that you were creating. dbt makes this a breeze.

We also use the graph that dbt outputs to enforce various checks on the models themselves. For example, all models must be tagged with a model_type, and that all models of type X must have a run tag with a certain set of values. This enforces consistency in code, and allows downstream tooling to make assumptions about what it will be dealing with.

De-coupling business logic from ‘plumbing’

By plumbing, I mean all of the mechanics of running the SQL to create something in the real world. dbt allows you to determine how your model is materialised, entirely separately from the SQL that is run. This means that you can switch between the following with ease:

ephemeral: models that depend on this model have the model ‘folded’ in as a Common Table Expression, or CTE.view: the model is created as a view. Models that depend on this model select from this view.table: the model is created as a table. Models that depend on this model select from this table.incremental: the model is initially created as a table. New rows are inserted. Existing rows are updated. You can exclude performing large amounts of work. Models that depend on this model select from this table.

There are a few benefits to this. First, clarity. The business logic remains clear, and the boring mechanics of how the models are materialised is hidden away. Second, flexibility. If you want to turn a table into an incremental model for performance reasons, that’s a very simple change which you can experiment with quickly.

Adding user-specific behaviour through tags

Tags are the unsung superpower in dbt. They are a list of user-provided key/value pairs. You can use tags to imbue meaning on a model, or set of models, that dbt does not natively support.

For example, we use the run tag at Monzo to delineate when we should run the model. Users tag their models with one of hourly, nightly, or weekly. When it comes to running the models later on, we simply select all models that are tagged with the appropriate run tag, and run them.

This means that users don’t have to care about how their model runs: it just does. This allows for clear contracts between data engineers and data analysts. Analysts write dbt models, data engineers figure out how to run them.

Other tags that could be useful to you:

importance: some models may be trivial, some may be critical to your business and should go through more rigorous change control. Tagging them as such provides context to people developing against it.needs_refactoring: This allows you to perform analysis over your codebase to understand whether code quality is getting better or worse. In combination withimportance, it would allow you to tell if business critical models have an unsustainable level of technical debt.

Get-out hatches

A beautiful nuance to dbt is that you can override a lot of the default behaviour with your own changes. Fancy changing how a particular materialisation works? You can do that!

This allows you a ‘get-out’ hatch to be able to tweak and tune it to your liking if the default behaviour doesn’t suit.

Documentation

Every dbt model has a SQL file with the code of the model, and a YAML file that describes the fields, and any associated tests. This means that documentation remains close to the model in a structured format, instead of adhoc comments next to the code. dbt ships with a way of rendering these docs, which you can serve to your organisation as a static site.

A subtle but important benefit is that this documentation is machine readable. This allows you to parse this documentation, and push it out to other tools like Looker: allowing a single place to document a field.

What is still challenging?

Trade-offs exist everywhere in software engineering. Beware any posts that provide 100% praise or criticism. The world is often grey.

Now that I've finished my soliloquy, here’s a few things that are challenging in dbt.

Mocking out input data

In traditional software engineering, a way of testing whether your code is correct is by providing a set of inputs, and asserting that you get the expected outputs after you put them through a function.

This form of ‘mocking out’ input data in dbt is technically possible, but challenging to do.

Working with thousands of models

dbt doesn’t perform well when you have thousands of models in a single project. Compilation times take a while, and remove from the snappy interactive experience that you’re used to.

I imagine this is an uncommon problem, exacerbated by the fact that Monzo has thousands of individual events which we build data models from.

Summary

The combination of the above makes dbt an extremely strong tool. I believe it will become 'the default' in the next few years, if it isn't already there, and the next billion dollar company in the space.

If you choose not to use dbt, you’ll probably waste time building a less-fully featured, buggy implementation of it yourself. Give it a serious look.

Best of the internet 🔗

What happens when I search for flights? - Tim Rogers

A nice dive into the key concepts behind flight search engines. Please do more of these!

Reservoir sampling - Florian Hartmann

Reservoir sampling is a beautiful algorithm to select k random elements from an unbounded stream of elements. This blog post explains it in far greater detail.

Dear Google Cloud: Your Deprecation Policy is Killing You - Steve Yegge

A passionate argument about the power of backwards compatibility. You’re an infrastructure company — don’t break stuff people are using!

For what it’s worth, I believe the backwards compatibility promises of Go is a huge contributor to the popularity of the language. Code I wrote 6 years ago compiles just fine.

That’s all from this week’s High Growth Engineering. If you enjoyed it, I’d really appreciate it if you could do one of the following:

Share it with a friend that would find it useful.

Follow me on Twitter: @sjwhitworth

Subscribe: just hit the button below.

All the best,

Stephen

Hi Stephen, one of my teammates passed me this article and I'm curious to hear if our recent product improvements have changed your opinion on the point about thousands of models.

We spent most of 2021 speeding things up, and I'm hoping you can tell!